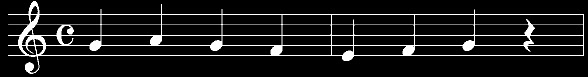

Figure 1. First 2 measures of "London Bridge Is Falling Down"

Fig. 1 shows the sheet excerpt used for this activity. These two bars contain four elements: the G-clef, the time signature, the quarter notes, and the quarter rests. We need not concern ourselves with the first two, since I will only be extracting a plain, monotone melody and not a full-blown sound complete with timbre and accent. To distinguish the quarter notes from the quarter rests, I used template matching by correlation, which we tackled in Activity 6.
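Template matching by correlation can be sketched with the correlation theorem: the cross-correlation of an image with a template is the inverse FFT of the image spectrum times the complex conjugate of the template spectrum. The Python/NumPy sketch below assumes a binary sheet image with the symbols as 1 on a 0 background, and the helper name `correlate_fft` is hypothetical. It computes a plain (unnormalized, circular) correlation. Because it uses the inverse FFT for the last step, its peaks appear at the template's top-left offset in the image.

```python
import numpy as np


def correlate_fft(image, template):
    """Circular cross-correlation of `template` with `image` via the FFT.

    Assumes both are 2-D arrays with symbols as 1 on a 0 background and
    that the template is smaller than the image.
    """
    # Zero-pad the template to the image size so the spectra can be multiplied.
    padded = np.zeros(image.shape, dtype=float)
    h, w = template.shape
    padded[:h, :w] = template
    # Correlation theorem: corr = IFFT( FFT(image) * conj(FFT(template)) ).
    spectrum = np.fft.fft2(image) * np.conj(np.fft.fft2(padded))
    # The result is real up to round-off for real inputs.
    return np.real(np.fft.ifft2(spectrum))
```

For example, correlating a blank 32×32 image that contains one copy of a 3×3 pattern starting at row 10, column 5 gives a map whose maximum sits at `(10, 5)`. On a real sheet, the note template and the rest template are each correlated with the same image to produce the two maps that are thresholded next.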

Figure 2. Thresholded image after correlation with a quarter note image

Figure 3. Thresholded image after correlation with a quarter rest image

Figure 4. Combined image of the quarter notes and rests positions

After the correlation, I thresholded the resulting images so that only the brightest spots remain, that is, the regions most correlated with the pattern image. Figs. 2 and 3 show the results for the quarter notes and the quarter rests, and Fig. 4 shows the combined image. The sequence in Fig. 4 is reversed, so it has to be rotated by 180 degrees to match Fig. 1. This is due to the fftshift(), and I took it into account in all the sorting done in this work.
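The thresholding and spot-location step can be sketched as follows. This is a minimal sketch, assuming the correlation map is a NumPy array: pixels within a fraction `frac` of the maximum (0.9 here, an assumed value) are kept, each connected bright region is found by an 8-connected flood fill, and its centroid is returned as `(row, col)`. The optional `flip180` flag, a hypothetical parameter, applies the 180-degree rotation described above with `np.rot90(corr, 2)` before the spots are sorted left to right.

```python
import numpy as np
from collections import deque


def find_spots(corr, frac=0.9, flip180=False):
    """Threshold a correlation map and return the (row, col) centroid of each
    bright spot, sorted left to right (the reading order of the sheet)."""
    if flip180:
        # Rotate the map by 180 degrees so its order matches the original sheet.
        corr = np.rot90(corr, 2)
    # Keep only pixels within `frac` of the strongest correlation value.
    mask = corr >= frac * corr.max()
    seen = np.zeros(mask.shape, dtype=bool)
    n_rows, n_cols = mask.shape
    spots = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        # Breadth-first flood fill over the 8-connected neighbours of the seed.
        queue = deque([(r0, c0)])
        seen[r0, c0] = True
        pixels = []
        while queue:
            r, c = queue.popleft()
            pixels.append((r, c))
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < n_rows and 0 <= cc < n_cols
                            and mask[rr, cc] and not seen[rr, cc]):
                        seen[rr, cc] = True
                        queue.append((rr, cc))
        # The centroid of the region stands in for the symbol's position.
        row, col = np.mean(pixels, axis=0)
        spots.append((float(row), float(col)))
    # Sort by column so the spots follow the left-to-right order of the music.
    return sorted(spots, key=lambda spot: spot[1])
```

Running it once on the note map and once on the rest map gives two coordinate lists that can be merged by column, which is the combined view of Fig. 4.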

From this, I identified the coordinates of the spots that correspond to quarter notes and quarter rests, which shows that the code can distinguish the different symbols on the music sheet. To assign the specific notes, I loop over several x ranges (the row ranges, i.e., the height in the image), since the height of a quarter note identifies its pitch. For the rests, the height is irrelevant because they carry only a duration, not a frequency: their meaning depends on their left-to-right placement alone, while the notes need both. To simulate a pause, I use a 44 kHz tone, since this is well beyond the range of human hearing. Sequencing the results finally generates the melody.
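The note assignment and sequencing can be sketched as below. Everything specific here is an assumption: the sampling rate `FS`, the beat length `BEAT`, and the row ranges in `ROW_TO_PITCH` are hypothetical placeholders that would have to be read off the actual sheet image. The pitch values are the standard equal-tempered frequencies of A4 down to C4. One deliberate swap: a rest is written as one beat of zeros rather than a 44 kHz tone. Silence does not depend on the sampling rate, whereas a sampled sine above half the sampling rate aliases to a lower and possibly audible frequency.

```python
import numpy as np

FS = 8192    # sampling rate in Hz (assumed)
BEAT = 0.5   # length of one quarter note or rest in seconds (assumed)

# Hypothetical row ranges (pixels, top of image = 0) mapped to pitches in Hz.
# Placeholders only: the real bounds must be read off the sheet image.
ROW_TO_PITCH = [
    ((0, 10), 440.00),   # A4
    ((10, 20), 392.00),  # G4
    ((20, 30), 349.23),  # F4
    ((30, 40), 329.63),  # E4
    ((40, 50), 293.66),  # D4
    ((50, 60), 261.63),  # C4
]


def pitch_for_row(row):
    """Return the frequency whose row range contains `row`."""
    for (lo, hi), freq in ROW_TO_PITCH:
        if lo <= row < hi:
            return freq
    raise ValueError(f"no pitch assigned to row {row}")


def synthesize(note_spots, rest_spots):
    """Build a waveform from (row, col) spots: notes need both row and
    column, rests only need their column."""
    # Tag each spot and merge notes and rests into left-to-right order.
    events = [(col, row, True) for row, col in note_spots]
    events += [(col, row, False) for row, col in rest_spots]
    events.sort()
    t = np.arange(int(FS * BEAT)) / FS
    chunks = []
    for col, row, is_note in events:
        if is_note:
            # The row (height) picks the pitch; every note lasts one beat.
            chunks.append(np.sin(2 * np.pi * pitch_for_row(row) * t))
        else:
            # A rest contributes one silent beat; its row is ignored.
            chunks.append(np.zeros_like(t))
    return np.concatenate(chunks)
```

To listen to the result, one option is `scipy.io.wavfile.write("melody.wav", FS, wave.astype(np.float32))`, where `wave` is the array returned by `synthesize`.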

It is now easy to improve this code, since its identification capabilities are already generalized. Further work is needed to automate the x-range selection for the note values.
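One possible way to automate the x-range selection is to locate the five staff lines from the row profile of the image and snap each note head to the nearest line or space. The sketch below assumes a binary image with ink as 1, a treble clef, and a five-line staff that spans most of the image width; the threshold `frac=0.5` and the function names are hypothetical. The row passed to `pitch_from_row` should be the note head's centre, not the top of the correlation template. Notes on ledger lines above or below the staff are snapped to the nearest edge position, so they are not handled correctly.

```python
import numpy as np

# Treble-clef pitches (Hz) of the nine line/space positions, from the top
# line (F5) down to the bottom line (E4).
TREBLE_TOP_DOWN = [698.46, 659.26, 587.33, 523.25, 493.88,
                   440.00, 392.00, 349.23, 329.63]


def staff_line_rows(binary, frac=0.5):
    """Return the centre row of each staff line in a binary image (ink = 1):
    rows in which at least `frac` of the pixels are ink."""
    profile = binary.mean(axis=1)          # ink fraction of each row
    rows = np.nonzero(profile >= frac)[0]
    if rows.size == 0:
        return []
    # Merge runs of adjacent rows (thick lines) into one centre row each.
    lines, run = [], [rows[0]]
    for r in rows[1:]:
        if r == run[-1] + 1:
            run.append(r)
        else:
            lines.append(float(np.mean(run)))
            run = [r]
    lines.append(float(np.mean(run)))
    return lines


def pitch_from_row(row, lines):
    """Snap a note-head row to the nearest line or space of a five-line staff."""
    half = (lines[-1] - lines[0]) / (len(lines) - 1) / 2   # half a line spacing
    positions = lines[0] + half * np.arange(len(TREBLE_TOP_DOWN))
    return TREBLE_TOP_DOWN[int(np.argmin(np.abs(positions - row)))]
```

With this, the hand-picked row ranges are replaced by positions derived from the line spacing, so the same code would adapt to sheets scanned at a different size.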

Self-Assessment: 10/10
